Article by Tamer Abuelata, Director of Engineering, PolyCore Software

The Multicore Communications API (MCAPI) was designed to address some of the most common challenges in multicore programming, providing a platform-agnostic standard API for sending and receiving messages between cores. Combined with the right tools, it provides a powerful solution for multicore programming.

The Problem

Today, a multitude of multicore devices are available for every kind of compute application: embedded, desktop and mobile processors, DSPs, hardware accelerators and FPGAs. New chips with ever more features are released all the time.

But how do I write application code that takes advantage of these powerful devices? And how do I avoid rewriting that code when I need to design in faster, less costly or less power-hungry silicon alternatives?

The problem becomes even more complex when the cores run different operating systems. For example, a single device may contain an ARM processor running Linux and a TI DSP running DSP/BIOS or no OS at all (“bare metal”). What communication mechanism should be used between cores running different operating systems, or located on different chips? And how should the programmer structure the application code to hide the platform specifics?

To add to the fun, consider the challenge of mixing and matching various transports (shared memory, DMA, RapidIO, serial, PCIe, TCP/IP) to optimize data movement. Should my application logic be concerned with this?

Fortunately, there are solutions ready to tackle these questions head-on and help me take advantage of the innovation in these devices.

What would make this easier?

Ideally, I would just push a magic button and transform my code into a fully optimized multicore application. Unfortunately, no one has found that magic button yet. However, solutions available today can make multicore life much easier.

Clearly, this challenge calls for a suitable programming method. To simplify the programming of multicore systems, I need a method that can span multiple boards, chips, operating systems and transports, and that scales to many cores. I also need to abstract the details of the underlying hardware away from the application code, to improve portability and code reuse and to reduce time to market.

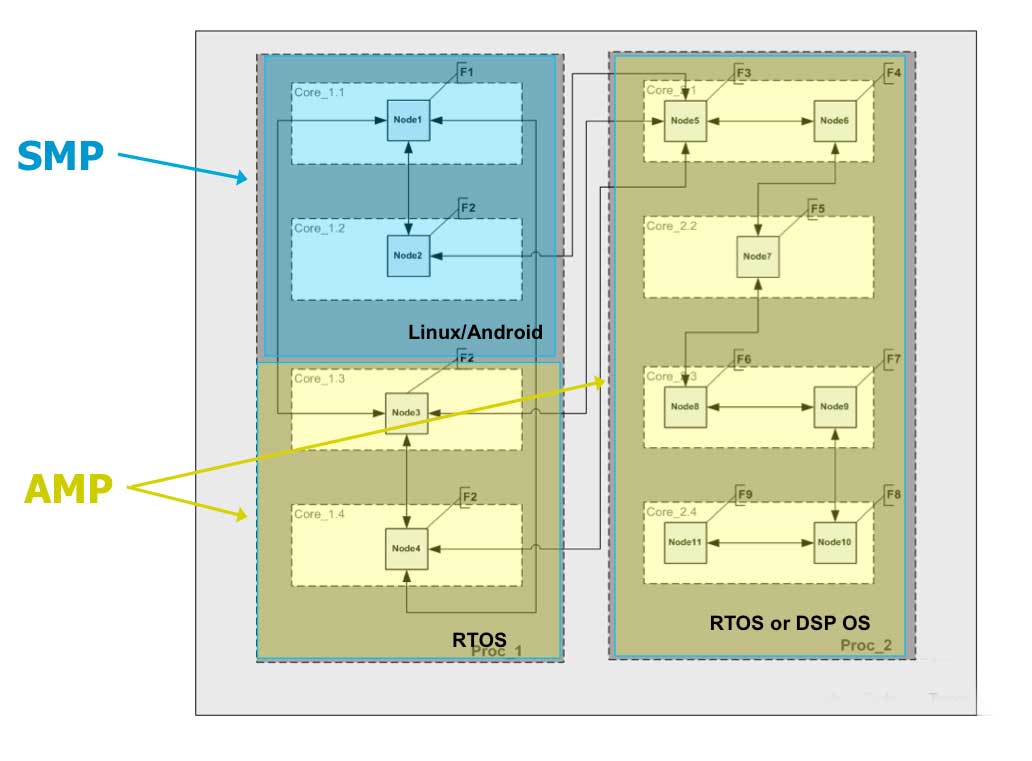

SMP has been a major player in the multicore world. It scales decently on desktop computers with two, four or even eight cores, running unrelated applications. However, past a certain point SMP simply doesn’t scale because it relies on shared resources. SMP also cannot be of use in heterogeneous environments. However, both SMP and AMP have their usefulness. Therefore, our programming paradigm should also be able to include SMP and AMP components.

|

| An example of a heterogeneous topology spanning multiple types of operating systems and different types of processors. |

Message Passing

Message passing answers the questions raised above and has been gaining momentum over the years. It simplifies application programming, scales well (in theory, without a hard limit, because it does not depend on shared resources the way SMP does) and helps address the challenges of heterogeneous environments. In a way, it is similar to email: to send an email, the sender only specifies a destination address and hits “send.” The sender does not need to know how the message will get there or what route it will take. The underlying system and network take care of transporting the message to its destination and alert the sender if delivery fails. Nor does the sender need to care which OS, device or email client the recipient is using; none of those variables affects how the email is written.

Similarly, from the application programmer’s point of view, it would be ideal to just say, “I want to send a message from core A to core B,” and have an underlying system deliver the message regardless of which OS the destination is running and which transport connects the two cores. The core type or transport could then be changed later without changing application code, which would still simply say “send this message from A to B.”
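To make this concrete, here is a minimal C sketch of a hypothetical messaging layer. Application code names only a source and destination core, and a per-link driver table decides how the bytes move. The names `link_driver`, `route_table` and `msg_send` are illustrative assumptions, not part of MCAPI or any PolyCore product. Both "drivers" simply copy into an in-process mailbox so that the sketch runs on one machine.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical messaging layer for illustration only; not the MCAPI API. */
#define NUM_CORES 4
#define MAX_MSG   64

/* One single-slot mailbox per destination core. A real transport would move
   the bytes over shared memory, DMA, PCIe, etc.; here both drivers copy. */
struct mailbox {
    char   data[MAX_MSG];
    size_t len;
    int    full;
};

static struct mailbox mailboxes[NUM_CORES];

/* Hypothetical link-driver interface: every transport exposes the same call. */
struct link_driver {
    const char *name;
    int (*send)(int dst, const void *buf, size_t len);
};

static int copy_to_mailbox(int dst, const void *buf, size_t len)
{
    if (len > MAX_MSG || mailboxes[dst].full)
        return -1;                      /* too large, or mailbox still occupied */
    memcpy(mailboxes[dst].data, buf, len);
    mailboxes[dst].len  = len;
    mailboxes[dst].full = 1;
    return 0;
}

static const struct link_driver shmem_driver = { "shared-memory", copy_to_mailbox };
static const struct link_driver pcie_driver  = { "pcie",          copy_to_mailbox };

/* Routing table: which driver carries traffic from core src to core dst.
   Changing an entry changes the transport without touching application code. */
static const struct link_driver *route_table[NUM_CORES][NUM_CORES];

/* The only call the application makes: "send this message from A to B". */
int msg_send(int src, int dst, const void *buf, size_t len)
{
    if (src < 0 || src >= NUM_CORES || dst < 0 || dst >= NUM_CORES)
        return -1;
    const struct link_driver *drv = route_table[src][dst];
    if (drv == NULL)
        return -1;                      /* no link configured between the cores */
    return drv->send(dst, buf, len);
}
```

Switching `route_table[0][1]` from `&shmem_driver` to `&pcie_driver` is purely a configuration change. The application's `msg_send(0, 1, ...)` call stays exactly the same.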

Another benefit of message passing is that it inherently provides synchronization: a message has exactly one owner at any given time. Even if the message data does not move in memory, ownership of its descriptor passes from sender to receiver, much like passing a token around.
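The single-owner property can be shown with a small C sketch of a hypothetical descriptor-passing API. `msg_pass` moves a descriptor into a one-slot channel and clears the sender's pointer, so after the call only the receiver can reach the buffer. The payload itself never moves; only ownership of the descriptor does. The names are illustrative, not MCAPI calls, and the sketch is single-threaded. A real cross-core channel would need atomic operations or a lock around the slot.

```c
#include <stddef.h>

/* Hypothetical descriptor-passing API for illustration only; not MCAPI. */

/* A message descriptor: the payload stays put, only the pointer changes hands. */
struct msg {
    char   payload[32];
    size_t len;
};

/* One-slot channel between two cores (single-threaded sketch, no atomics). */
struct channel {
    struct msg *slot;
};

/* Transfer ownership: on success the sender's handle is set to NULL,
   so the sender can no longer reach the descriptor. */
int msg_pass(struct channel *ch, struct msg **owned)
{
    if (ch->slot != NULL || owned == NULL || *owned == NULL)
        return -1;          /* channel busy, or nothing to send */
    ch->slot = *owned;
    *owned = NULL;          /* sender gives up ownership */
    return 0;
}

/* Take ownership: the receiver gets the descriptor and the slot is emptied. */
struct msg *msg_take(struct channel *ch)
{
    struct msg *m = ch->slot;
    ch->slot = NULL;
    return m;
}
```

At every point, exactly one of the sender's handle, the channel slot, or the receiver's handle points at the descriptor. That is the "token" the text describes.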

Message passing is a well-known, tried-and-tested programming method available in most environments, both locally within an operating system and remotely across a network. Most programmers therefore already know it and need little training to use it.

The Internet itself is the largest message-passing network. Any device that implements TCP/IP can consume or deliver services on the Internet, regardless of its hardware or operating system. The Internet, which for the most part runs on TCP/IP, is living proof that message passing can scale significantly and easily.

Do Message Passing the Right Way

Standards such as HTTP helped the Internet become ubiquitous. Similarly, for a multicore application to interoperate across many environments, it should be built on standards.

Whenever new technologies are adopted, designers should carefully identify and select solutions that are long-lasting, based on standards and backed by an active user community. Open standards such as the Multicore Association’s Multicore Communications API (MCAPI) help ensure that an application built today will still run tomorrow and will require minimal effort to maintain or port to new platforms. The Multicore Association (MCA) designed MCAPI to address common challenges in multicore communication, targeting closely distributed computing (chip, board, rack). Because MCAPI is based on message passing, it is highly scalable and suitable for the many-core systems we can expect in the future. Believing that message passing is the future of multicore, the MCA developed MCAPI as a platform-agnostic standard API for sending and receiving messages between cores.

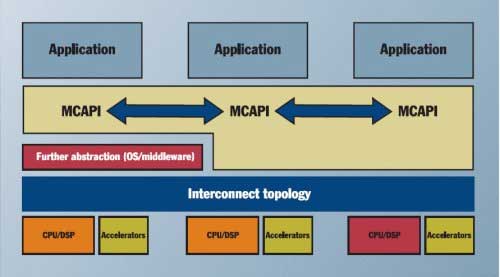

MCAPI is a message-passing API that captures the basic elements of communication and synchronization that are required for closely distributed embedded systems.

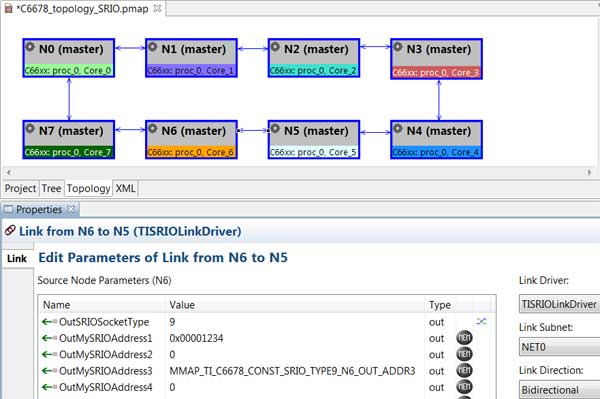

For the sake of efficiency and flexibility, the programming model should allow the programmer to focus on application logic. The processor-specific, OS-specific and transport-specific code would be written by a systems programmer or, by using modeling tools, generated automatically after a configuration effort. Applications developers have the opportunity to minimize, and even with some specific tools, eliminate that part of the coding effort. Using graphical tools and wizards, the programmer would input the platform characteristics using a friendly user interface. From the configuration files and the model, the platform-specific code would be generated to build the underlying communication infrastructure.

|

| Editing the transport used to communicate between two cores by configuring a link driver using a graphical topology editor. |
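As an illustration of separating configuration from application logic, the C fragment below sketches the kind of static topology table a generator might emit from a graphical model. The node names, the `transport` enum and the `find_link` and `node_name` helpers are hypothetical. They do not reflect the output format of any particular tool. Application code would only call lookup or send routines built on this table and would never hard-code a transport.

```c
#include <stddef.h>

/* --- Begin hypothetical generated code (illustrative format, not a real tool's) --- */
enum transport { TR_SHMEM, TR_DMA, TR_SRIO, TR_TCPIP };

struct node_cfg { int id; const char *name; const char *os; };
struct link_cfg { int src; int dst; enum transport tr; };

static const struct node_cfg nodes[] = {
    { 0, "arm0", "Linux"      },
    { 1, "dsp0", "DSP/BIOS"   },
    { 2, "dsp1", "bare metal" },
};

static const struct link_cfg links[] = {
    { 0, 1, TR_SHMEM },
    { 0, 2, TR_DMA   },
    { 1, 2, TR_SRIO  },
};
/* --- End generated code --- */

#define COUNT(a) (sizeof(a) / sizeof((a)[0]))

/* Return the configured link between two nodes (either direction), or NULL. */
const struct link_cfg *find_link(int a, int b)
{
    for (size_t i = 0; i < COUNT(links); i++) {
        if ((links[i].src == a && links[i].dst == b) ||
            (links[i].src == b && links[i].dst == a))
            return &links[i];
    }
    return NULL;
}

/* Look up a node's name by id, or NULL if it is not in the topology. */
const char *node_name(int id)
{
    for (size_t i = 0; i < COUNT(nodes); i++)
        if (nodes[i].id == id)
            return nodes[i].name;
    return NULL;
}
```

Under this arrangement, retargeting the `arm0`–`dsp1` link from DMA to shared memory would mean editing the model and regenerating the table, not touching application logic.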

Such tools are desirable for several reasons. First, they save time by generating consistent, high-quality code. That consistency is key for large engineering teams spread across the globe: code generated in China or India will be the same as code generated in the U.S. or Germany. Second, these tools can validate the configuration and catch errors before runtime. Third, they allow rapid reconfiguration, so that porting to other platforms or scaling up or down can be done quickly and easily, without rewriting application code. Finally, they can generate MCAPI-compliant code, ensuring that applications are built on an established standard.
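A pre-runtime configuration check can be sketched in C as follows. The sketch assumes a hypothetical configuration format consisting of a list of node ids and a list of links. The validator flags links that reference undeclared nodes, links from a node to itself, and duplicate links, then reports how many problems it found. Real tools would check much more (for example, whether a transport is available on a given processor). The structure and rules here are illustrative assumptions.

```c
#include <stdio.h>
#include <stddef.h>

/* Hypothetical configuration format for illustration only. */
struct link { int src; int dst; };

/* Return nonzero if id appears in the list of declared node ids. */
static int node_declared(const int *nodes, size_t n_nodes, int id)
{
    for (size_t i = 0; i < n_nodes; i++)
        if (nodes[i] == id)
            return 1;
    return 0;
}

/* Validate a topology before anything is built or run.
   Prints each problem to stderr and returns the number of problems found. */
int validate_topology(const int *nodes, size_t n_nodes,
                      const struct link *links, size_t n_links)
{
    int errors = 0;
    for (size_t i = 0; i < n_links; i++) {
        const struct link *l = &links[i];
        if (!node_declared(nodes, n_nodes, l->src) ||
            !node_declared(nodes, n_nodes, l->dst)) {
            fprintf(stderr, "link %zu: undeclared node\n", i);
            errors++;
        }
        if (l->src == l->dst) {
            fprintf(stderr, "link %zu: node linked to itself\n", i);
            errors++;
        }
        for (size_t j = 0; j < i; j++) {
            /* Treat links as undirected: 0-1 and 1-0 are the same link. */
            if ((links[j].src == l->src && links[j].dst == l->dst) ||
                (links[j].src == l->dst && links[j].dst == l->src)) {
                fprintf(stderr, "link %zu: duplicates link %zu\n", i, j);
                errors++;
                break;
            }
        }
    }
    return errors;
}
```

Running a check like this as part of the build means a typo in the topology surfaces as a build-time error rather than as a message that silently never arrives.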

Graphical tools also help developers visualize the overall system, letting them examine the big picture and more easily decide what to change in upcoming design cycles. Analyses can be run to verify performance and to identify problem areas that may need to be reconfigured.

Another key element in providing abstraction is a runtime engine that transparently delivers messages across multiple transports. The runtime is responsible for balancing performance against resource utilization; its performance goal is to maximize throughput while keeping runtime overhead to a minimum. Therefore, in addition to graphical configuration and code-generation tools, a supporting runtime engine is essential: an implementation of MCAPI that is optimized for various platforms and supports different transports.
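One common way a runtime can keep overhead and memory use predictable is to preallocate a fixed-size ring of message slots per endpoint. With this approach, the send/receive fast path never calls the allocator, and the memory footprint is known at build time. The C sketch below shows that idea for a single producer and a single consumer on one thread. It is an assumed design for illustration, not a description of how any particular MCAPI implementation works. A real cross-core version would need atomic indices and memory barriers.

```c
#include <stddef.h>
#include <string.h>

/* Assumed design for illustration: a fixed, preallocated per-endpoint ring. */
#define RING_SLOTS 8          /* must be a power of two */
#define SLOT_BYTES 64

/* All storage is reserved up front: no malloc on the send/receive path. */
struct ring {
    char     slot[RING_SLOTS][SLOT_BYTES];
    size_t   len[RING_SLOTS];
    unsigned head;            /* count of messages read so far    */
    unsigned tail;            /* count of messages written so far */
};

/* Enqueue a copy of buf; returns -1 if the ring is full or buf is too large. */
int ring_put(struct ring *r, const void *buf, size_t n)
{
    if (n > SLOT_BYTES || r->tail - r->head == RING_SLOTS)
        return -1;
    unsigned i = r->tail & (RING_SLOTS - 1);   /* wrap index into the slot array */
    memcpy(r->slot[i], buf, n);
    r->len[i] = n;
    r->tail++;
    return 0;
}

/* Dequeue into out (capacity cap); returns bytes copied, or -1 if the ring
   is empty or the next message does not fit in out. */
long ring_get(struct ring *r, void *out, size_t cap)
{
    if (r->head == r->tail)
        return -1;
    unsigned i = r->head & (RING_SLOTS - 1);
    if (r->len[i] > cap)
        return -1;
    memcpy(out, r->slot[i], r->len[i]);
    r->head++;
    return (long)r->len[i];
}
```

The choice of `RING_SLOTS` and `SLOT_BYTES` is where the performance/resource balance shows up. More slots absorb bursts and sustain throughput, at the cost of more fixed memory per endpoint.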

The Solution

To simplify programming for multicore systems, the programming methodology should scale across homogeneous and heterogeneous environments and support a standard that is both tested and adopted by the programming community. To improve productivity, developers should use tools that save time, improve code quality and speed the product to market. The model should allow easy migration to other multicore systems, be based on established standards, and remain flexible and agnostic to specifics such as the OS or processor. Message passing, combined with graphical tools, code generation and an optimized runtime, is just the right prescription for ensuring that the benefits of multicore are fully realized.

Tamer Abuelata, director of engineering for PolyCore Software, has been part of the team since 2008 and has been a major contributor to the growth of Poly-Platform. Prior to joining PolyCore Software, he co-founded a mobile application development company focused on the medical market. He has been responsible for the successful launch of three applications since 2010. Additionally, Mr. Abuelata has held engineering roles at BEA Systems (later acquired by Oracle), Handspring/Palm and ShoreTel. He began programming at the age of ten and is now fluent in many programming languages. In 2008, he earned a bachelor of arts in philosophy from San Jose State University, and he currently pursues his interest in linguistics through private study.